Tag Archives: Kubernetes storage solutions

Use Of Kubernetes Metrics Server

For monitoring, Kubernetes has an integrated tool called Metrics Server. Metrics Server is a cluster-wide aggregator of resource usage data.

It collects metrics such as CPU and memory usage for containers and nodes from cAdvisor, which runs on each node. Metrics Server is a scalable and efficient source of resource metrics for the built-in Kubernetes autoscaling pipelines. For more information about the best Kubernetes storage solutions, visit https://kubevious.io/blog/post/comparing-top-storage-solutions-for-kubernetes/.

Metrics Server keeps all the information it collects from nodes and pods in memory. It is an in-memory monitoring solution that does not persist metrics to disk.

Therefore, historical performance data cannot be displayed. The Metrics API can be accessed via:

kubectl top nodes, which shows the CPU and memory consumption of each node.

kubectl top pods, which shows the CPU and memory consumption of the pods running on K8s.
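As a rough illustration of working with these numbers, the Python sketch below parses text shaped like the output of kubectl top nodes. The sample table, the node names, and the helper names are made up for this example, and it assumes CPU is reported in millicores and memory in Mi.

```python
# Hypothetical sample text in the column layout printed by `kubectl top nodes`.
SAMPLE_OUTPUT = """\
NAME     CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
node-a   250m         12%    1024Mi          27%
node-b   1200m        60%    3072Mi          81%
"""


def strip_suffix(value, suffix):
    """Remove a trailing unit such as 'm', '%' or 'Mi' if it is present."""
    return value[: -len(suffix)] if value.endswith(suffix) else value


def parse_top_nodes(text):
    """Turn the table text into a list of dicts, one per node."""
    lines = [line for line in text.splitlines() if line.strip()]
    rows = []
    for line in lines[1:]:  # skip the header row
        name, cpu, cpu_pct, mem, mem_pct = line.split()
        rows.append({
            "name": name,
            "cpu_millicores": int(strip_suffix(cpu, "m")),
            "cpu_percent": int(strip_suffix(cpu_pct, "%")),
            "memory_mib": int(strip_suffix(mem, "Mi")),
            "memory_percent": int(strip_suffix(mem_pct, "%")),
        })
    return rows


def busiest_node(rows):
    """Return the name of the node with the highest CPU percentage."""
    return max(rows, key=lambda row: row["cpu_percent"])["name"]


rows = parse_top_nodes(SAMPLE_OUTPUT)
print(busiest_node(rows))
```

Because Metrics Server keeps no history, a script like this only ever sees the current snapshot.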

Metrics Server is used for advanced Kubernetes orchestration such as the Horizontal Pod Autoscaler, which scales pods automatically.
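To give a feel for how an autoscaler can use these metrics, here is a minimal Python sketch of the replica calculation documented for the Horizontal Pod Autoscaler: the desired count is the current count scaled by the ratio of the observed metric to the target, rounded up. The function name is hypothetical, and the sketch leaves out details of the real controller such as tolerances and stabilization windows.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric):
    """Scale the replica count by the ratio of observed to target metric."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    return math.ceil(current_replicas * current_metric / target_metric)


# 4 pods averaging 90% CPU against a 60% target
print(desired_replicas(4, 90, 60))
```

With 4 pods averaging 90% CPU against a 60% target, the sketch asks for 6 pods.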

Metrics Server offers:

- One implementation that works in most clusters

- Scalable support for up to 5,000 cluster nodes

- Resource efficiency: Metrics Server uses 0.5 millicores of CPU and 4 MB of memory per node

Other Kubernetes Monitoring Tools

With the increasing use of containers and microservices in companies, monitoring utilities need to handle more services and server instances than before. Although the infrastructure landscape has changed, operations teams still need to monitor the same things: CPU, RAM, file systems, network usage, and so on.

As a result, there are third-party tools on the market that can monitor Kubernetes with much better log and metric tracking. Let’s look at some of our favorite open-source tools.

Google Kubernetes Engine: Workloads And Operating Modes

As we all know, GKE works with containerized applications. These are applications packaged into platform-independent, isolated user-space instances, for example using Docker.

In GKE and Kubernetes, these containers, whether they run applications or batch jobs, are collectively referred to as workloads. Before deploying a workload on a GKE cluster, the user must first package the workload in a container. For more information about the best Kubernetes storage solutions, visit https://kubevious.io/blog/post/comparing-top-storage-solutions-for-kubernetes/.

When you create a cluster in Google Kubernetes Engine, you can choose between the two operating modes described below:

1.) Standard mode

This is the original operating mode that shipped with GKE and is still in use today. It gives users flexibility in node configuration and full control over cluster management and node infrastructure. It is best for those who want full control over every aspect of their GKE experience.

2.) Autopilot mode

In this mode, all cluster node and infrastructure management is carried out by Google, which is a more hands-off approach. However, there are several limitations to consider: the choice of operating system is currently limited to two options, and most features are only available through the CLI.

Kubernetes Engine applications

Now that we have studied how Kubernetes Engine works, let’s see what it is actually used for. Kubernetes Engine can be used to:

- Create or resize container clusters

- Create pods, controllers, jobs, services, or load balancers

- Update and upgrade container clusters

- Debug container clusters

Oracle Container Engine For Kubernetes (OKE) For Beginners

Kubernetes is the most sought-after container orchestrator, and Oracle Container Engine for Kubernetes is sometimes abbreviated as OKE. It is a fully managed, scalable, and highly available service that lets you host containerized applications in the cloud. For more information about the best Kubernetes storage solutions, visit https://kubevious.io/blog/post/comparing-top-storage-solutions-for-kubernetes/.

Even though Kubernetes is very useful today, it is still difficult to configure, manage, and maintain in production. Cloud providers recognized this and introduced managed Kubernetes services to address the problem.

In a self-managed Kubernetes architecture, both the master and the worker nodes are managed by the user. In managed Kubernetes services, the third-party provider manages the master nodes and the user manages the worker nodes. In addition, managed Kubernetes offers dedicated support and hosting in a pre-configured environment. Managed solutions do a lot of this configuration for you.

Oracle Cloud Infrastructure Container Engine for Kubernetes is a fully managed, scalable, and highly available service that lets you deploy containerized applications to the cloud. Container Engine for Kubernetes uses Kubernetes, an open-source system that automates the deployment, scaling, and management of containerized applications across clusters of hosts.

The Oracle Kubernetes service is built on Oracle Cloud Infrastructure and uses OCIR (Oracle Cloud Infrastructure Registry) to store, share, and manage Docker images. The two services work well together. In Oracle's setup there are 3 master nodes and 3 etcd clusters for high availability, created in three different availability domains within a region.

The third tier is the worker nodes, which can be virtual machines or bare metal instances managed by the customer. You pay only for the worker nodes; the master and etcd nodes are free.

Components Of Amazon Elastic Kubernetes Service

Amazon EKS is a managed service used to run Kubernetes on AWS. EKS users do not need to maintain their own Kubernetes control plane. It is used to automate the deployment, scaling, and maintenance of containerized applications.

Works with most operating systems:

Node: A node is a physical or virtual machine. In EKS, both master and worker nodes are managed through EKS. There are two types of nodes.

Master node: The master node is a collection of components such as storage, controllers, the scheduler, and the API server that make up the Kubernetes control plane. EKS itself creates and manages master nodes.

API server: Handles all API calls, whether they come from kubectl (the Kubernetes CLI) or the REST API.

etcd: This is a highly available key-value store distributed across the Kubernetes cluster for storing configuration data.

Controller Manager: Cloud controllers are used to manage virtual machines, storage, databases, and other resources associated with Kubernetes clusters. The controller manager ensures that as many containers as needed are running at any given time. It regulates the number of containers in use and also records their state.

Scheduler: Decides what work needs to be done and when. It is integrated with the controller manager and the API server.

Worker nodes: Worker nodes in the cluster are the physical computers or servers on which your applications run. Users are responsible for creating and managing worker nodes.

Kubelet: Controls the flow to and from the API server. It ensures that containers are running inside the pod.

Kubernetes For Testers And QA

A container is a self-contained software package that includes everything needed to run an application. This includes the operating system, application code, runtime, system tools, system libraries, and more.

Docker containers are built from Docker images. Because an image is read-only, Docker adds a read-write file system on top of the image's read-only file system to create a container.

Kubernetes is an open-source platform for managing containerized workloads and services. Kubernetes takes care of scaling and failover for your application running in containers.

After the architect designs the application, the developer deploys it in containers. The tester then needs to verify that the application works well and determine whether any changes are needed. After this is done, the tester checks the application end-to-end to see whether any components in the cluster need to be scaled up or changed.

There are two ways to validate / test clusters:

1) Manual testing

2) Automated testing

Manual testing:

Manual testing checks each cluster component by hand. This includes checking whether nodes are ready, checking the status of pods in the cluster, running applications to test services, and checking secrets, encryption, security, storage, networking, etc.
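One of these manual checks, confirming that every node is ready, can be sketched in Python. The sample text below imitates the column layout of kubectl get nodes; the node names, versions, and function name are invented for illustration.

```python
# Hypothetical sample text in the column layout printed by `kubectl get nodes`.
SAMPLE_NODES = """\
NAME     STATUS     ROLES    AGE   VERSION
node-a   Ready      <none>   12d   v1.27.3
node-b   NotReady   <none>   12d   v1.27.3
"""


def not_ready_nodes(text):
    """Return the names of nodes whose STATUS column does not include Ready."""
    lines = [line for line in text.splitlines() if line.strip()]
    failing = []
    for line in lines[1:]:  # skip the header row
        name, status = line.split()[:2]
        # STATUS can be compound, for example "Ready,SchedulingDisabled".
        if "Ready" not in status.split(","):
            failing.append(name)
    return failing


print(not_ready_nodes(SAMPLE_NODES))
```

A tester would still follow up on any node listed here by inspecting it directly.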

Automatic testing:

A smoke test is a type of test that determines whether a deployed build is stable. It exercises the main features to ensure they work well. The smoke test confirms whether the QA team can proceed with further testing. Smoke tests are the minimum set of tests run on every build.
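A smoke test suite can be as small as a list of named checks that must all pass before deeper testing starts. The Python sketch below shows one way to structure that; the check names and the lambda bodies are placeholders, not real cluster probes.

```python
def run_smoke_tests(checks):
    """Run each (name, callable) pair; a check passes if it returns a truthy value."""
    results = {}
    for name, check in checks:
        try:
            results[name] = bool(check())
        except Exception:
            # A check that raises counts as a failure rather than aborting the run.
            results[name] = False
    return results


def build_is_stable(results):
    """The build is considered stable only if every smoke check passed."""
    return all(results.values())


# Placeholder checks; real ones would call the application or the cluster.
checks = [
    ("homepage responds", lambda: True),
    ("login works", lambda: True),
    ("database reachable", lambda: 1 / 0),  # simulates a failing check
]

results = run_smoke_tests(checks)
print(results, build_is_stable(results))
```

If any check fails, the QA team would stop here instead of continuing with the full test cycle.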

CKA Certification Exam (Certified Kubernetes Administrator)

With Kubernetes at the forefront of the container world, many IT professionals are candidates for Kubernetes certification because of its undeniable value. Now for the good news: the Certified Kubernetes Administrator (CKA) exam has been easier since September 2020.

CNCF has removed the topics that carried little weight.

The Certified Kubernetes Administrator certification is intended to ensure that Kubernetes administrators have the skills and knowledge to carry out the responsibilities of the role.

Why CKA Certification (Certified Kubernetes Administrator):

- It gives you an edge over non-certified professionals.

- Better job prospects and higher salaries.

- Nearly all companies have moved to K8s or are trying to, sooner or later.

- A wide range of job offers in IT for CKA holders.

- It gives you worldwide recognition of your knowledge, skills, and experience.

CNCF took a smart step and moved application development topics to the Certified Kubernetes Application Developer (CKAD) exam and security topics to the Certified Kubernetes Security Specialist (CKS) exam. As a result, CKA focuses more on administration, which makes a lot of sense.

Everyone wants to learn Kubernetes today, and the best way to learn it is by doing. In particular, developers who want to become a Certified Kubernetes Administrator (CKA) should work through practical labs and add Kubernetes application development to their experience.

Deploy Applications With Kubernetes And Docker

The main difference between Kubernetes and Docker is that Kubernetes is designed to run across a cluster while Docker runs on a single node. Kubernetes is more extensive than Docker Swarm and aims to coordinate cluster nodes efficiently at production scale. If you are looking for the best Kubernetes storage solutions, visit https://kubevious.io/blog/post/comparing-top-storage-solutions-for-kubernetes/.

You need a container runtime such as Docker Engine to start and stop containers on a host. When multiple containers are running on multiple hosts, you need an orchestrator to answer questions such as: Where does the next container start? How do you make containers highly available? How do you control which containers can communicate with other containers? This is where orchestrators like Kubernetes come into play.

There are several steps involved in deploying a Docker image on a Kubernetes cluster. The whole process might seem a little confusing if you’re new to Kubernetes and Docker, but the setup isn’t difficult. The steps involved depend on the type of application you are deploying.

Following are the steps for deploying a web application:

- Package a sample web application in a Docker image.

- Upload the Docker image to the container registry.

- Create a Kubernetes cluster.

- Deploy the sample application to the cluster.

- Manage deployment auto-scaling.

- Expose the sample application to the internet.

- Deploy a new version of the sample application.
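For the step of deploying the sample application to the cluster, the Python sketch below builds a minimal Deployment manifest as a dictionary and prints it as JSON, which kubectl apply -f accepts alongside YAML. The application name, registry URL, replica count, and port are all placeholders chosen for this example.

```python
import json


def build_deployment(name, image, replicas=2, port=8080):
    """Build a minimal apps/v1 Deployment manifest for a single-container app."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


# Placeholder name and image; substitute your own registry path and tag.
manifest = build_deployment("sample-web", "registry.example.com/sample-web:1.0")
print(json.dumps(manifest, indent=2))
```

Rolling out a new version then comes down to changing the image tag and applying the manifest again.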

How does Kubernetes work with Docker?

You’ll understand by now that Docker helps you “build” containers, whereas K8s lets you “manage” them at runtime. Docker is used to package and run your applications. K8s lets you deploy and scale your applications in the same way. For more information about the best Kubernetes storage solutions, visit https://kubevious.io/blog/post/comparing-top-storage-solutions-for-kubernetes/.

Kubernetes only comes into play when there are many containers to manage. While the big tech giants are adopting Kubernetes, small startups may not need K8s to manage their applications.

However, as a company grows, its infrastructure needs increase. As a result, the number of containers grows and can become difficult to manage. Voila! This is where Kubernetes comes in.

Together, Docker and K8s serve as tools for digital transformation and for modern cloud architectures. Using both has become the new norm in the industry for faster application deployments and releases.

As noted above, just running containers in production isn’t enough. They need to be orchestrated, and Kubernetes has some great features that make working with containers a lot easier. K8s provides automatic scaling, health checks, and load balancing, which are essential for container life cycle management.

Kubernetes constantly checks the deployment status against the YAML definition. If a Docker container goes down, K8s will automatically spin up a new one.
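This check-and-repair behavior can be pictured as a small reconciliation step that compares the desired state with what is actually running. The Python sketch below is a toy model of that idea, not Kubernetes' own controller code; the container records and action names are invented for illustration.

```python
def reconcile(desired_replicas, running):
    """Return the actions needed to bring `running` in line with the desired count.

    `running` is a list of dicts such as {"name": "web-1", "healthy": True}.
    """
    healthy = [c for c in running if c["healthy"]]
    # Unhealthy containers are removed first.
    actions = [("remove", c["name"]) for c in running if not c["healthy"]]

    missing = desired_replicas - len(healthy)
    if missing > 0:
        # Too few healthy containers: start replacements.
        actions += [("start", f"replacement-{i}") for i in range(missing)]
    elif missing < 0:
        # Too many healthy containers: stop the surplus.
        actions += [("stop", c["name"]) for c in healthy[desired_replicas:]]
    return actions


state = [
    {"name": "web-1", "healthy": True},
    {"name": "web-2", "healthy": False},
    {"name": "web-3", "healthy": True},
]
print(reconcile(3, state))
```

In this toy run, one container has failed, so the step removes it and starts a single replacement to get back to three.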

In short, Docker containers help you isolate and package your software across environments. Kubernetes, on the other hand, helps you with provisioning and managing your containers. The takeaway is that the combination of Docker and Kubernetes increases confidence and productivity for everyone.

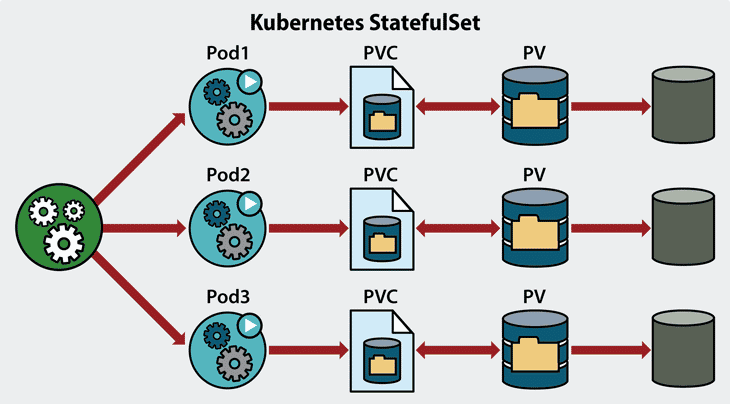

How Can You Manage Your Kubernetes Storage More Easily?

When it comes to a data system, the first and foremost question (after you store your data) is of course how to manage it. And because so much data is stored in Kubernetes systems, it is important to know how to do so efficiently and well. If you are looking for the best Kubernetes storage solutions, visit https://kubevious.io/blog/post/comparing-top-storage-solutions-for-kubernetes/.

For example, did you know that when you define a pod, you can specify how much CPU and RAM each container needs? When resource requests are specified on the containers themselves, the scheduler uses them to decide which node to place the pod on.
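As a small illustration, the Python sketch below describes a pod with per-container resource requests and adds up the CPU requested across its containers. The pod name, images, and quantities are placeholders; the resources.requests and resources.limits fields follow the standard pod spec layout.

```python
def parse_cpu(quantity):
    """Convert a Kubernetes CPU quantity such as "500m" or "2" to millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return int(round(float(quantity) * 1000))


# Placeholder pod definition with two containers.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "demo-pod"},
    "spec": {
        "containers": [
            {
                "name": "web",
                "image": "nginx",
                "resources": {
                    "requests": {"cpu": "250m", "memory": "64Mi"},
                    "limits": {"cpu": "500m", "memory": "128Mi"},
                },
            },
            {
                "name": "sidecar",
                "image": "busybox",
                "resources": {"requests": {"cpu": "0.1", "memory": "32Mi"}},
            },
        ]
    },
}


def total_cpu_request(pod_manifest):
    """Sum the CPU requests of all containers in the pod, in millicores."""
    containers = pod_manifest["spec"]["containers"]
    return sum(parse_cpu(c["resources"]["requests"]["cpu"]) for c in containers)


print(total_cpu_request(pod))
```

The pod as a whole requests 350 millicores here, which is the figure a scheduler would need to find room for on a node.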

It is hard to name one objectively best method because, in reality, there are so many options. The choice depends on personal preference and experimentation. You need a system that really meets your needs.

Maybe something with:

- Open-source design.

- Persistent storage

- Data replication in the kernel

- Fast response time

- Low CPU requirements

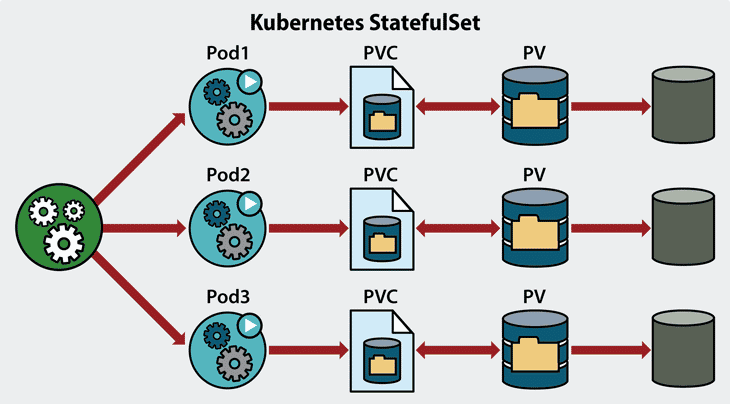

Kubernetes persistent storage

Kubernetes is a container orchestration tool that has become a standard for how businesses deploy and use applications. Calling it a “revolution” in the way business applications are used is sales talk, and it clearly overstates things.

This is the next step in the way we use, access, and store our application data. It is above all an “evolution”. Expect a logical separation between the application and the infrastructure when you develop a microservices architecture.

Developers consume what they need so they can focus on relevant work. Kubernetes abstracts away the actual machines you run on. With Kubernetes, you can describe the storage and computing power you want and let the system provide it without dealing with the underlying machines.
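Describing the storage you want usually takes the form of a PersistentVolumeClaim. The Python sketch below builds a minimal claim as a dictionary and prints it as JSON; the claim name, size, and storage class are placeholders, and which storage classes exist depends entirely on your cluster.

```python
import json


def build_pvc(name, size, storage_class=None, access_mode="ReadWriteOnce"):
    """Build a minimal v1 PersistentVolumeClaim manifest."""
    spec = {
        "accessModes": [access_mode],
        "resources": {"requests": {"storage": size}},
    }
    if storage_class is not None:
        # Omitting storageClassName lets the cluster use its default class.
        spec["storageClassName"] = storage_class
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": spec,
    }


# Placeholder values; "fast-ssd" is a hypothetical storage class name.
claim = build_pvc("app-data", "10Gi", storage_class="fast-ssd")
print(json.dumps(claim, indent=2))
```

The claim states only what is needed (10Gi, mounted read-write by one node) and leaves the choice of backing volume to the cluster.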

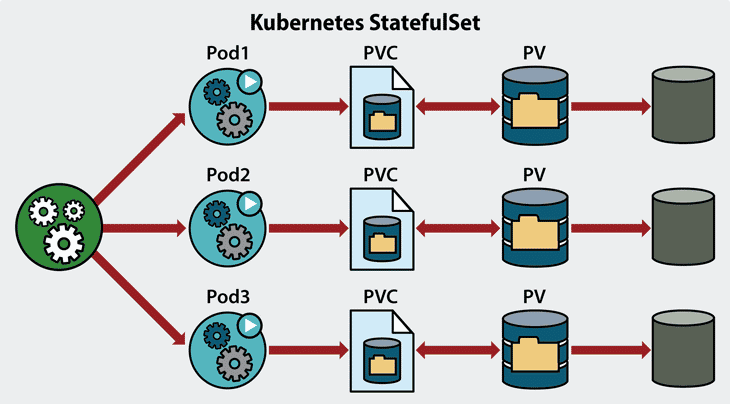

Compare The Best Kubernetes Storage Solutions

Kubernetes revolutionized how applications are developed, deployed, and scaled. While Kubernetes is useful for container management, it does not natively provide container data storage. This means that the storage mechanism must be provided externally on different hosts as needed, and these volumes may need to scale as usage grows. You can get more details about the best Kubernetes storage solutions via https://kubevious.io/blog/post/comparing-top-storage-solutions-for-kubernetes/.

This article describes and evaluates popular cloud-native storage solutions. We will start with a quick overview of cloud-native storage tools and why they are needed for Kubernetes.

Simply put, keeping data available across cluster restarts requires a storage solution or mechanism that manages the cluster's data operations. Cloud-native storage solutions provide this mechanism for container-based applications and offer ways to store data in cloud-based environments.

Cloud-native storage solutions mimic the characteristics of cloud environments. These include scalability, a containerized architecture, and high availability, which allow easy integration with container management platforms and the provision of persistent storage for containerized applications.

GlusterFS:

GlusterFS is an excellent open-source project that gives Kubernetes administrators a mechanism for deploying their own storage service on their existing Kubernetes cluster. It is a well-defined file storage framework that can scale out, handle a large number of clients, and run on top of any on-disk file system that supports the features it needs.

Gluster also uses industry-standard protocols such as SMB and NFS for network file systems, and it supports replication, cloning, and bitrot detection to identify data corruption.

Hi, my name is Martin and I am a teacher at Davis School of sports, Sacramento. Learning new stuff and writing about the latest topics is my hobby. I came up with Blue Water Fishing Classic so that knowledge can be shared without any limitations.